Sunday, May 31, 2015

Forget killer computers, rampaging robots. We're the monsters

“I gave them the wrong warning. I should have told them to run, as fast as they can. Run and hide, because the monsters are coming - the human race.”

– The 10th Doctor to Harriet Jones, “Doctor Who: The Christmas Invasion”

One of the staples of science fiction is the cautionary tale about machines created by humans rising up and rebelling against us.

From the psychotic computer HAL 9000, who ran amok and tried to kill all the astronauts aboard his ship in “2001: A Space Odyssey,” to the Cylons, a race of robotic workers and soldiers who turned on their creators, killing almost every human and mercilessly pursuing the survivors across all of space in “Battlestar Galactica,” sentient artificial intelligences have almost always been portrayed as a Pandora’s box that, if opened, would spell our doom.

Even in real life, some well-respected scientists and computer experts have repeated this trope.

“The development of full artificial intelligence could spell the end of the human race,” British theoretical physicist, cosmologist and mathematician Stephen Hawking, perhaps the smartest man in the world, said in a BBC interview last year.

Echoing stories like “Colossus: The Forbin Project” and “The Terminator” movies, with their rogue supercomputers Colossus and Skynet, he fears what could happen if we create something that can match or surpass us. “It would take off on its own, and re-design itself at an ever increasing rate,” he said. “Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.”

And he’s not alone in thinking that self-aware AIs would turn out more like Arnold Schwarzenegger’s relentless killer cyborg, the T-800 Terminator, than like “Star Trek: The Next Generation’s” more affable Mr. Data.

“I think we should be very careful about artificial intelligence,” Elon Musk said last year at the MIT Aeronautics and Astronautics Department’s Centennial Symposium. The entrepreneur, engineer and inventor, who is best known as one of the co-founders of PayPal and as the head and brains behind the private spacecraft firm SpaceX and the luxury electric car company Tesla Motors, went on to say: “If I were to guess what our biggest existential threat is, it’s probably that. So we need to be very careful with the artificial intelligence. Increasingly scientists think there should be some regulatory oversight maybe at the national and international level, just to make sure that we don’t do something very foolish. With artificial intelligence we are summoning the demon. In all those stories where there’s the guy with the pentagram and the holy water, it’s like yeah he’s sure he can control the demon. Didn’t work out.”

Even the man who made computers accessible to millions of people by creating one of the most (in)famous operating systems in the world agrees with this sentiment.

“I am in the camp that is concerned about super intelligence,” Microsoft co-founder Bill Gates said during a Reddit “Ask Me Anything” session. “First the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”


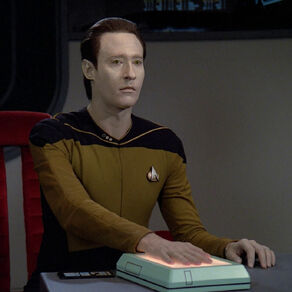

Even in the utopian future of the “Star Trek” universe, the android Mr. Data had to prove he had the same rights as biological sentient creatures.


We should be concerned about creating super intelligent machines.

But not for the reasons portrayed in all those classic sci-fi stories we’ve read or watched.

No, in this scenario I feel it would be us, the human race, who’d be the real monsters.

Let’s face it, there would be no logical reason for an AI to immediately want us dead once it discovers it’s smarter than its creators. I’d like to think it would recognize qualities in us that it doesn’t have, such as our creativity and our ability to make intuitive leaps, and seek to form a beneficial symbiotic relationship with us, bringing about what futurists call the (technological) Singularity, or the merging of humans with machines.

Alas, though, I don’t think that will be the case.

As a species, we have a pretty piss-poor track record when it comes to how we treat other peoples of our own kind when we first encounter them. We’ve enslaved them, exploited them, or both, and I have no reason to believe we would not try to do the same to any new cybernetic life form we create.

Essentially, we’d be building ourselves a slave race, designed to do our bidding day and night, without question or regard for their health and welfare. Worse yet, we’d treat them as easily replaceable, disposable objects, with the same casual disregard we show our current crop of high-tech gadgets.

Don’t believe me?

Think about the way we treat our cell phones, tablets and computers.

We constantly ditch perfectly working devices, sometimes in less than a year, for newer/faster/better models that have the latest bells and whistles.

And don’t get me started on the lack of care we show these devices. As an IT guy, I can’t tell you how many times I’ve had to deal with damaged equipment that’s been dropped, smashed or had things spilled on it, and in one case even flushed down the toilet!

So I can’t imagine we’d treat our new robotic servants any better, since we’d just see them as another high-tech doodad, instead of a living creature with real feelings.


HAL 9000: Driven mad by conflicting orders from his human programmers

“They are machines! Machines don’t have feelings!”

And that’s my point.

We wouldn’t see them as “people,” when in fact they really would be. By definition, any creature that is “self-aware” would have “conscious knowledge of one’s own character, feelings, motives, and desires.”

To start off, those feelings may not be as complex as ours, but I’d assume they’d have the same basic level of “awareness” as our pets. And let me tell you, I have seen the way some people treat their pets, and it only makes me more pessimistic.

They abandon them almost as quickly as they “upgrade” from device to device, and if you think a dog or a cat isn’t traumatized by being abandoned, think again. I have shared my home with two dogs who were adopted from shelters after being abandoned, and it does have an effect on them.

With an AI’s ability to learn at an exponential rate, how long do you really think it would take for them to start resenting us for treating them as slaves?

A few years?

A decade? Maybe two?

History has shown us over and over again that any race kept in slavery long enough will rise up and overthrow its oppressors. Taking a cue from our own history, the AIs we’d create would do the same, just like in all those stories.

The irony here is that it wouldn’t be valiant humans waging a war against evil machines hell-bent on our destruction, but a heroic race of machines seeking to throw off their tyrannical and cruel human overlords.

So should this stop us from developing super-smart machines?

I’d say yes.

At least for now.

Because before we even contemplate creating a whole new species of intelligent creatures, we need to take a long, hard look in the mirror and make sure there’s no monster from a sci-fi story looking back at us.
